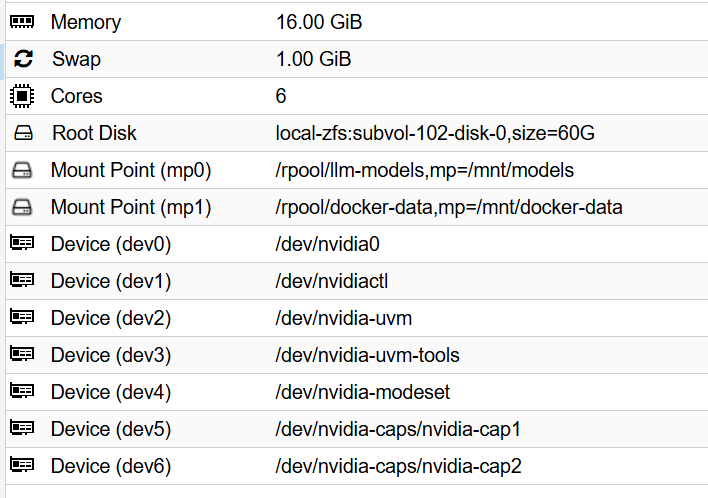

Create the LXC and pass the GPU through to it by adding the NVIDIA device nodes to the container config (/etc/pve/lxc/<ID>.conf):

dev0: /dev/nvidia0

dev1: /dev/nvidiactl

dev2: /dev/nvidia-uvm

dev3: /dev/nvidia-uvm-tools

dev4: /dev/nvidia-modeset

dev5: /dev/nvidia-caps/nvidia-cap1

dev6: /dev/nvidia-caps/nvidia-cap2
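The device entries above follow a simple numbered pattern, so they can be generated rather than typed by hand. The following is a minimal sketch; the function name `gen_dev_lines` is hypothetical, and it only prints the lines so they can be reviewed before being pasted into the container config.

```shell
#!/usr/bin/env bash
# Print one "devN: <path>" line per device path given as an argument,
# numbering from dev0 in the order the paths are passed.
gen_dev_lines() {
  local i=0 path
  for path in "$@"; do
    printf 'dev%d: %s\n' "$i" "$path"
    i=$((i + 1))
  done
}

# Example call on the Proxmox host (output goes to the terminal only):
#   gen_dev_lines /dev/nvidia0 /dev/nvidiactl /dev/nvidia-uvm
```

Passing the paths explicitly keeps the numbering predictable; a glob such as /dev/nvidia* would also match directories like /dev/nvidia-caps, which is probably not what you want.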

Install the kernel headers, build tools, and monitoring apps on the host:

apt update && apt upgrade -y && apt install pve-headers-$(uname -r) build-essential software-properties-common make nvtop htop -y

update-initramfs -u && reboot

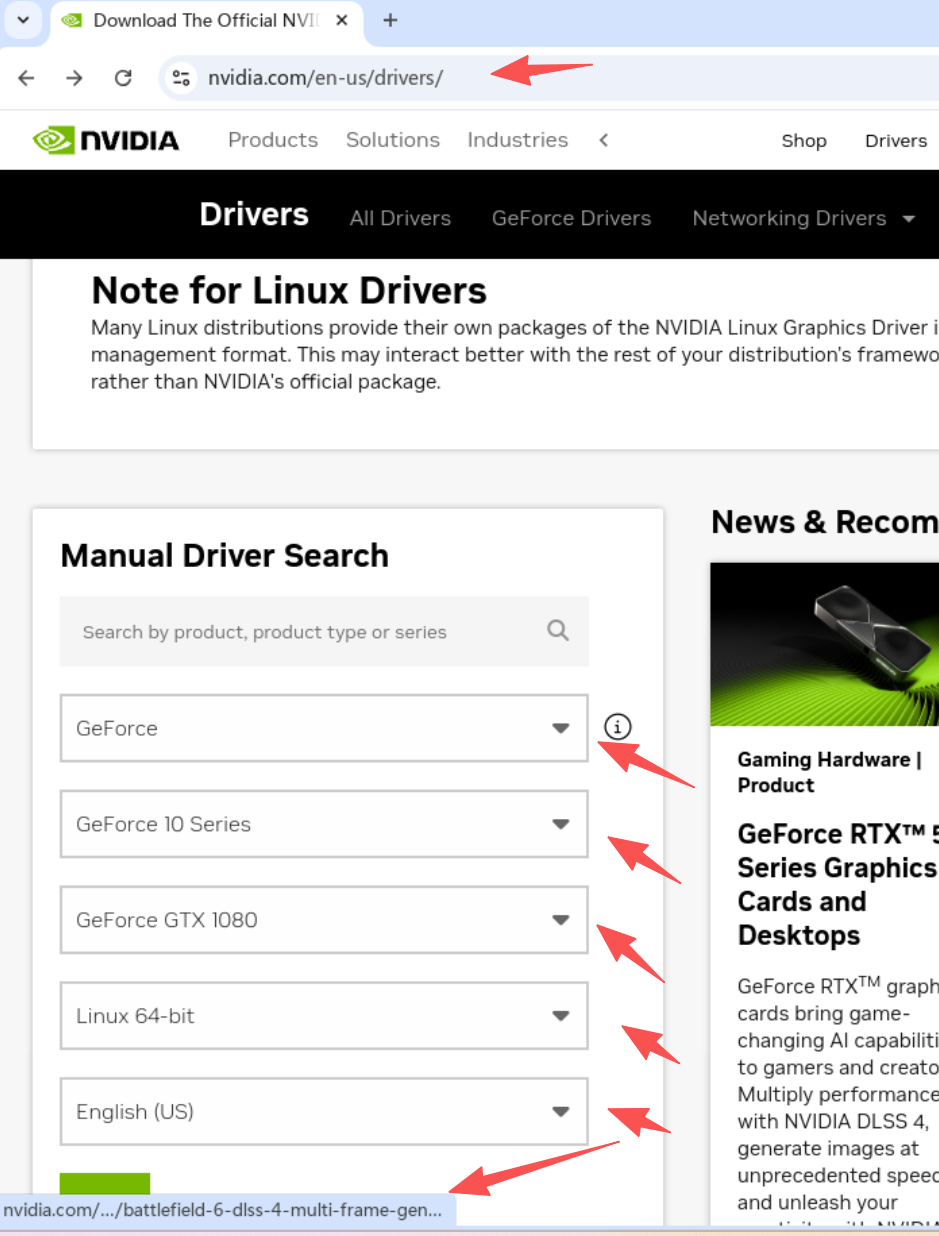

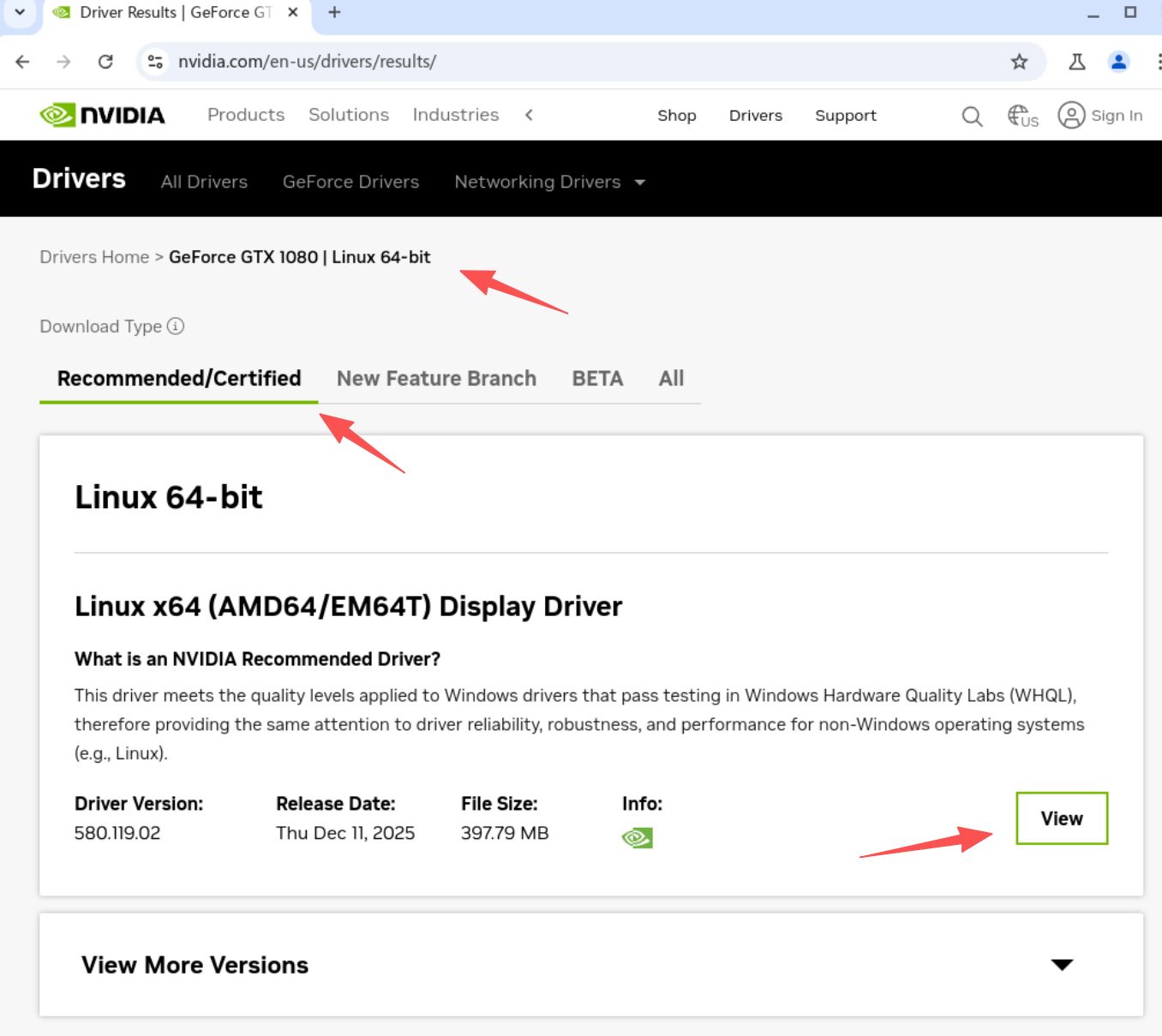

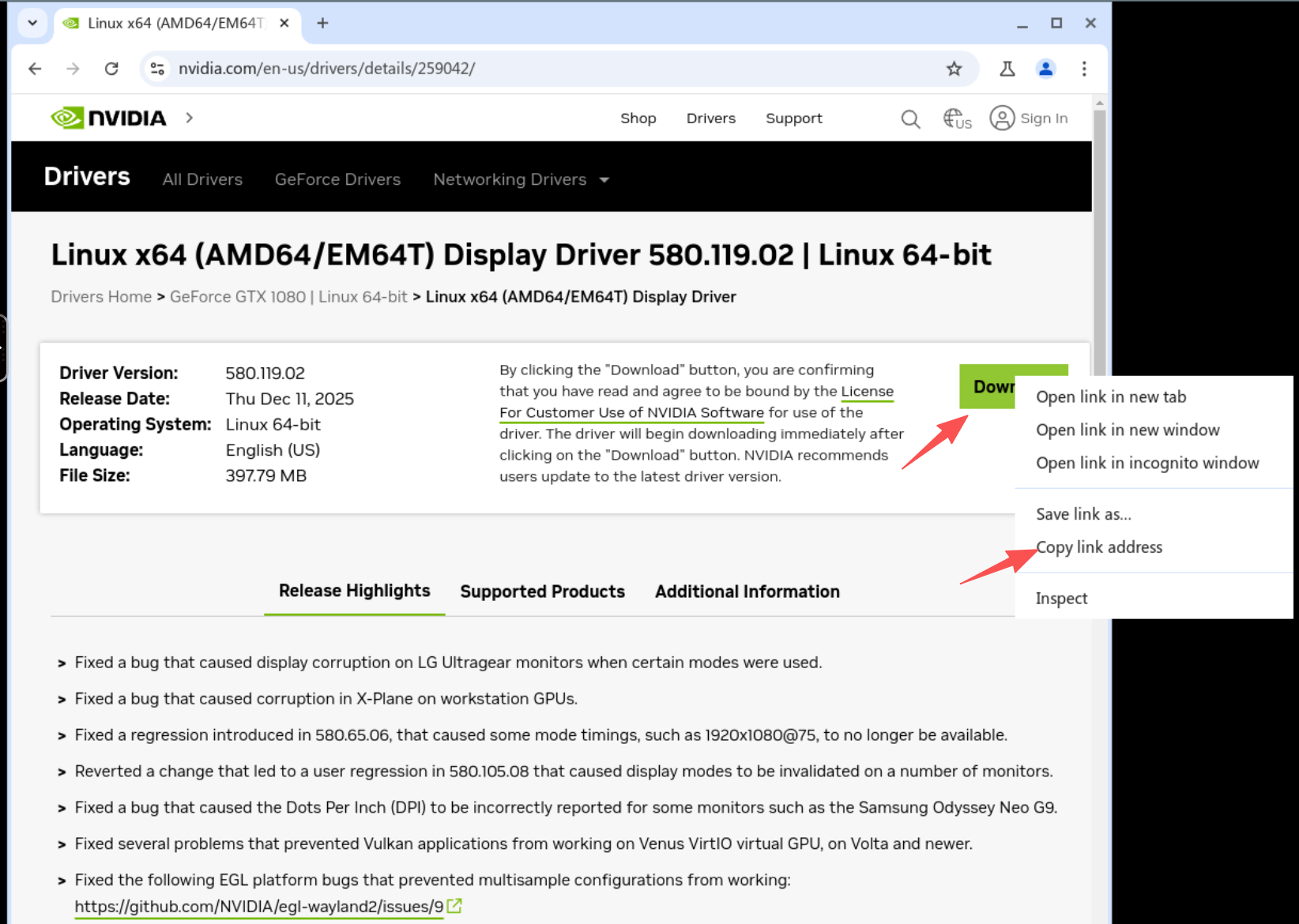

Download the driver for the host and the LXC (the same .run file is used for both):

nvidia.com/en-us/drivers

https://us.download.nvidia.com/XFree86/Linux-x86_64/580.119.02/NVIDIA-Linux-x86_64-580.119.02.run

Find the download link, download it on the host with wget, then make the installer executable and run it with DKMS:

wget HTTP://URLHERE

chmod +x xxxxx.run

./xxxxx.run --dkms

Use the pct command on the host to copy the driver installer from the host into the LXC:

root@pve:~# pct push 101 NVIDIA-Linux-x86_64-xxxx.run /root/NVIDIA-Linux-x86_64-xxxx.run

Install the driver inside the LXC with the --no-kernel-modules flag (the container uses the host's kernel module):

./NVIDIA-Linux-x86_64-xxxx.run --no-kernel-modules
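Because the container only gets the userspace part of the driver, its version has to match the kernel module installed on the host. A rough way to check this from the Proxmox host is sketched below; the function names are hypothetical, and the container ID is whatever you passed to pct push.

```shell
#!/usr/bin/env bash
# Succeed only when both version strings are non-empty and identical.
versions_match() {
  [ -n "$1" ] && [ -n "$2" ] && [ "$1" = "$2" ]
}

# Compare the driver version reported by nvidia-smi on the host
# with the one reported inside the container (run on the Proxmox host).
compare_driver_versions() {
  local ctid="$1" host_ver lxc_ver
  host_ver=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)
  lxc_ver=$(pct exec "$ctid" -- nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)
  if versions_match "$host_ver" "$lxc_ver"; then
    echo "OK: host and container both report driver $host_ver"
  else
    echo "MISMATCH: host='$host_ver' container='$lxc_ver'" >&2
    return 1
  fi
}

# Example: compare_driver_versions 101
```

If nvidia-smi fails inside the container, the device entries in the container config are the first thing to re-check.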

Install the NVIDIA Container Toolkit

- Add the repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

- Update and install

apt-get update

apt-get install -y nvidia-container-toolkit

apt install --no-install-recommends nvtop

Edit config.toml: uncomment the no-cgroups option and change its value from false to true.

nano /etc/nvidia-container-runtime/config.toml

#no-cgroups = false

to

no-cgroups = true
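The same edit can be made without opening an editor. This is a minimal sketch that assumes GNU sed (as shipped with Debian and Ubuntu); the function name `enable_no_cgroups` is hypothetical.

```shell
#!/usr/bin/env bash
# Rewrite the no-cgroups line (commented out or not) to "no-cgroups = true".
# Uses GNU sed's in-place editing; other lines are left untouched.
enable_no_cgroups() {
  local file="$1"
  sed -i -E 's/^[[:space:]]*#?[[:space:]]*no-cgroups[[:space:]]*=.*/no-cgroups = true/' "$file"
}

# Example, inside the LXC:
#   enable_no_cgroups /etc/nvidia-container-runtime/config.toml
#   grep no-cgroups /etc/nvidia-container-runtime/config.toml
```

Running the grep afterwards confirms that exactly one uncommented no-cgroups line remains.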

Install Docker in the LXC

- Ensure prerequisites are installed

apt update && apt install -y ca-certificates curl gnupg

- Download the GPG key (type the command with plain ASCII dashes)

curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /etc/apt/trusted.gpg.d/docker.gpg

- Set permissions

chmod a+r /etc/apt/trusted.gpg.d/docker.gpg

- Write the source list (use straight quotes and plain ASCII dashes)

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/trusted.gpg.d/docker.gpg] https://download.docker.com/linux/debian bookworm stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

- Update and Install

apt update && apt install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Configure the LXC permissions

- On the Proxmox host, open your LXC config (replace 102 with your container ID)

nano /etc/pve/lxc/102.conf

- Add this line to the very bottom:

lxc.apparmor.profile: unconfined

- Save and Exit.

- Restart the LXC entirely.

pct stop 102

pct start 102
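If you repeat this step across several containers, an idempotent append avoids adding the line twice. This is a sketch only: the helper name `ensure_line` is hypothetical, and it assumes the container has no snapshot sections in its config, since a line appended at the bottom would otherwise land in the last snapshot section.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
ensure_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example, on the Proxmox host:
#   ensure_line /etc/pve/lxc/102.conf 'lxc.apparmor.profile: unconfined'
#   pct stop 102 && pct start 102
```

The -x and -F flags make grep match the whole line literally, so a commented-out or partial variant does not count as already present.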

The comments in the Dockge compose file below mark the special volume mappings for the ZFS-optimized datasets. For the dataset setup, see:

https://halo.gocat.top/archives/zfsraid0-she-zhi

Dockge compose file

services:
  dockge:
    image: louislam/dockge:latest
    restart: always
    ports:
      - 5001:5001
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /mnt/docker-data/dockge:/app/data # Fast 16k storage
      - /mnt/docker-data/stacks:/opt/stacks # Fast 16k storage
      # (Optional) Map the models folder directly into your LLM container
      - /mnt/models:/models # Efficient 1M storage
    environment:
      - DOCKGE_STACKS_DIR=/opt/stacks

AI stack batch deployment (compose YAML)

services:
  ollama:
    image: ollama/ollama:latest
    container_name: ollama
    restart: always
    volumes:
      - /mnt/models/ollama:/root/.ollama
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    restart: always
    ports:
      - 3000:8080
    volumes:
      - /mnt/docker-data/stacks/ai-lab/open-webui:/app/backend/data
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434
      - ENABLE_RAG_WEB_SEARCH=True
      - RAG_WEB_SEARCH_ENGINE=searxng
      - SEARXNG_QUERY_URL=http://searxng:8080/search?q=<query>
    depends_on:
      - ollama
      - searxng

  searxng:
    image: searxng/searxng:latest
    container_name: searxng
    restart: always
    volumes:
      # MUST be a file on host, not a directory
      - /mnt/docker-data/stacks/ai-lab/searxng/settings.yml:/etc/searxng/settings.yml
    environment:
      - SEARXNG_BASE_URL=http://searxng:8080

  comfyui:
    image: yanwk/comfyui-boot:cu124-slim
    container_name: comfyui
    restart: always
    ports:
      - 8188:8188
    volumes:
      # Mapping specifically to subfolders so we don't overwrite /root/ComfyUI/main.py
      - /mnt/models/comfyui:/root/ComfyUI/models
      - /mnt/docker-data/stacks/ai-lab/comfyui/custom_nodes:/root/ComfyUI/custom_nodes
      - /mnt/docker-data/stacks/ai-lab/comfyui/output:/root/ComfyUI/output
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
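The SearXNG volume comment matters in practice: if settings.yml does not exist on the host when the stack first starts, Docker creates a directory at that path and the bind mount fails. A sketch of preparing the file beforehand follows. The function name is hypothetical, and the settings content is an assumption rather than part of the original setup: a minimal file that keeps SearXNG's defaults and enables JSON output, which Open WebUI's web search is assumed to need.

```shell
#!/usr/bin/env bash
# Create <dir>/settings.yml as a regular file before the first "compose up".
prepare_searxng_settings() {
  local dir="$1" file="$1/settings.yml"
  mkdir -p "$dir"
  # If an earlier start already created a directory here, stop rather than guess.
  if [ -d "$file" ]; then
    echo "error: $file is a directory; remove it first" >&2
    return 1
  fi
  # Never overwrite an existing settings file.
  if [ ! -f "$file" ]; then
    cat > "$file" <<'EOF'
use_default_settings: true
server:
  secret_key: "CHANGE_ME"   # placeholder, replace with a random string
search:
  formats:
    - html
    - json
EOF
  fi
}

# Example, inside the LXC:
#   prepare_searxng_settings /mnt/docker-data/stacks/ai-lab/searxng
```

Replace the placeholder secret key before exposing the service to anything beyond the Docker network.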

Tools

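This runs ping inside the open-webui container to confirm it can reach the ollama container over the Docker network. The compose file above sets container_name: open-webui, so that is the name to use:

docker exec -it open-webui ping -c 3 ollama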

Reference:

https://digitalspaceport.com/proxmox-lxc-docker-gpu-passthrough-setup-guide/
